
Memory depth and sampling rate in oscilloscopes

Oscilloscopes have become useful tools in many fields. Besides their core oscilloscope function, many of them also integrate other functions. This article explains two main oscilloscope parameters, memory depth and sample rate, and the relationship between them. Both parameters are also important when assessing an oscilloscope.

At first glance, the main purpose of an oscilloscope is to display the characteristics of a signal from the device or equipment under test. To do this, the oscilloscope acquires the signal through its input port and amplifies or attenuates it as needed. The signal is then digitized by the analog-to-digital converter (ADC) and stored in memory.
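The acquisition chain just described (input, amplification or attenuation, ADC, memory) can be sketched as a toy simulation. This is a minimal illustration under loudly stated assumptions, not how any real instrument is implemented: the function `acquire` and its parameters are hypothetical, the ADC is an ideal 8-bit quantizer with a ±1 V full-scale range, and the front end is a noise-free gain stage.

```python
import math


def acquire(signal, sample_rate_hz, memory_depth, gain=1.0, full_scale_v=1.0, adc_bits=8):
    """Toy acquisition chain (hypothetical): amplify/attenuate -> clip -> quantize -> store.

    signal: function of time in seconds that returns volts at the input.
    Returns at most `memory_depth` integer ADC codes.
    """
    levels = 2 ** adc_bits                  # 256 codes for an 8-bit ADC
    lsb_v = 2 * full_scale_v / levels       # volts per code over a -FS..+FS input range
    memory = []                             # acquisition memory with a fixed depth
    for n in range(memory_depth):           # acquisition stops when the memory is full
        t = n / sample_rate_hz              # time of the n-th sample
        v = gain * signal(t)                # front-end amplification or attenuation
        v = max(-full_scale_v, min(full_scale_v, v))          # clip to the ADC input range
        code = int(math.floor((v + full_scale_v) / lsb_v))   # ideal quantizer
        memory.append(min(code, levels - 1))                  # +FS maps to the top code
    return memory


# A 1 MHz, 0.5 V-amplitude sine sampled at 100 MSa/s into 1000 points of memory.
codes = acquire(lambda t: 0.5 * math.sin(2 * math.pi * 1e6 * t),
                sample_rate_hz=100e6, memory_depth=1000)
print(len(codes), min(codes), max(codes))
```

Because the memory holds only 1000 points, this record covers 1000 / 100 MSa/s = 10 µs of signal; the fixed memory depth, not the signal, decides where the capture ends.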

In a digital oscilloscope, storage is a key concept, and storage requires memory. That memory always has a specific depth. Generally speaking, more memory is better, but as we shall see, that is not always the case.

One of the key parameters in an oscilloscope is the memory depth, and it can always be found in the specifications.

For example, the Keysight Technologies DSOS054A oscilloscope has a memory depth of 800 Mpts.

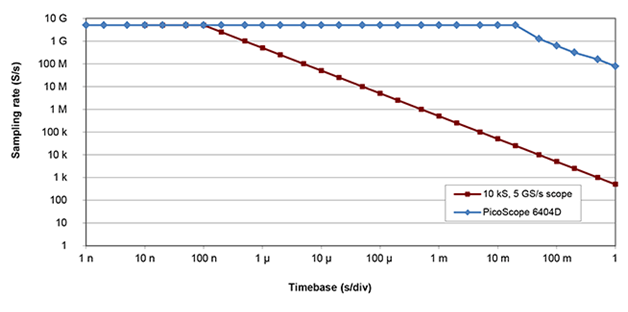

So what is the benefit of a large acquisition memory? More memory lets the oscilloscope maintain a high sampling rate over a longer period of time. A higher sampling rate resolves finer signal detail, which improves the chance of catching a glitch or other bad waveform. And because the sample rate does not have to be reduced at slower timebase settings, the oscilloscope retains more of its effective bandwidth.

However, a large memory depth has a downside: under certain conditions it slows the oscilloscope down. If the processor cannot keep up with the demands of a deep memory, dead time increases. Dead time, which is closely tied to the waveform update rate, is the time the oscilloscope needs to trigger, process the captured data, and finally show the data on the display; the scope is not acquiring the signal during this interval. When debugging, a long dead time therefore makes it more likely that the oscilloscope misses an infrequent event. In this case, a short update interval (a high waveform update rate) is highly desirable. In this sense, deep memory can degrade scope performance.
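The effect of dead time on catching rare events can be made concrete with a simplified probability model. This is a sketch under an explicit assumption: events occur independently at random times, so each one lands inside an acquisition window with probability equal to the fraction of time the scope is actually acquiring. The helper names and all numbers (window length, update rates, glitch rate) are hypothetical and chosen only for illustration.

```python
def live_fraction(window_s, update_rate_wfm_per_s):
    """Fraction of real time spent acquiring; the remainder is dead time."""
    return min(1.0, window_s * update_rate_wfm_per_s)


def capture_probability(window_s, update_rate_wfm_per_s, event_rate_per_s, observe_s):
    """Chance of catching at least one occurrence of a rare event.

    Simplifying assumption: events occur independently at random times, so each
    one falls inside an acquisition window with probability equal to the live fraction.
    """
    p = live_fraction(window_s, update_rate_wfm_per_s)
    n_events = event_rate_per_s * observe_s
    return 1.0 - (1.0 - p) ** n_events


# Hypothetical case: 1 us window (100 ns/div x 10 div), a glitch 10 times per second,
# watched for 1 second, at two different waveform update rates.
for rate in (1_000, 100_000):
    p = capture_probability(1e-6, rate, event_rate_per_s=10, observe_s=1.0)
    print(f"{rate:>7} wfm/s -> {p:.1%} chance of seeing the glitch")
```

Under these assumptions the slower update rate gives roughly a 1% chance of seeing the glitch within one second, while the faster one gives roughly 65%, even though the capture window is identical.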

To review, an important equation to keep in mind is:

Measurement duration = memory depth/sampling frequency.
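As a quick numerical check of this equation, the sketch below compares how much signal two memory depths can hold at the same sample rate. The 10 GSa/s rate is an assumed illustrative value, not a specification of any particular model, and the function name is hypothetical.

```python
def capture_duration_s(memory_depth_pts, sample_rate_sa_per_s):
    """Measurement duration = memory depth / sampling frequency."""
    return memory_depth_pts / sample_rate_sa_per_s


ASSUMED_RATE = 10e9  # hypothetical 10 GSa/s, used for both memory depths

deep = capture_duration_s(800e6, ASSUMED_RATE)     # 800 Mpts, as in the DSOS054A example
shallow = capture_duration_s(10e3, ASSUMED_RATE)   # a shallow 10 kpts memory
print(f"800 Mpts: {deep * 1e3:.0f} ms   10 kpts: {shallow * 1e6:.0f} us")
```

At the same sample rate, 800 Mpts holds 80 ms of signal while 10 kpts holds 1 µs, a factor of 80,000, which is simply the ratio of the two memory depths.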

Some scopes use special techniques to keep their sampling rate high while using a deep memory. They use hardware acceleration to sample faster than a typical scope with 10 kpts of memory at all timebase settings slower than 100 ns/div.
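Why 100 ns/div is the crossover point for a 10 kpts scope can be seen by computing the usable sample rate at several timebase settings. This sketch assumes both scopes share a hypothetical 10 GSa/s maximum rate, that the record exactly fills 10 screen divisions, and that the usable rate is the smaller of the ADC maximum and memory depth divided by the displayed time window. It does not model any vendor's hardware acceleration; the 100 Mpts depth and the helper name are illustrative.

```python
def effective_sample_rate(memory_depth_pts, time_per_div_s, max_rate_sa_per_s, divisions=10):
    """Usable sample rate: limited by the ADC maximum or by memory filling the screen."""
    window_s = time_per_div_s * divisions
    return min(max_rate_sa_per_s, memory_depth_pts / window_s)


MAX_RATE = 10e9  # hypothetical 10 GSa/s ADC, identical for both scopes

for tdiv in (100e-9, 1e-6, 10e-6, 100e-6, 1e-3):
    shallow = effective_sample_rate(10e3, tdiv, MAX_RATE)   # 10 kpts memory
    deep = effective_sample_rate(100e6, tdiv, MAX_RATE)     # 100 Mpts memory
    print(f"{tdiv:>8g} s/div   10 kpts: {shallow / 1e9:8.4f} GSa/s   "
          f"100 Mpts: {deep / 1e9:8.4f} GSa/s")
```

At 100 ns/div both scopes run at the full 10 GSa/s; at every slower setting the 10 kpts scope must drop its rate (down to 1 MSa/s at 1 ms/div), while the 100 Mpts scope still holds 10 GSa/s at 1 ms/div.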

In short, deeper memory enables a higher sampling rate at a given timebase setting, and a large memory makes it possible to capture a longer stretch of signal. However, because a larger record takes longer to process, it can lengthen the update interval and make the oscilloscope slower. The problem with this scenario is that important waveform events may be missed. Alternative triggering methods can mitigate this difficulty.

Memory depth is linked to sample rate, a metric of great concern to the instrument's user. The relationship between memory depth and sample rate is:

Sample rate = memory depth / (time per division × number of divisions).
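This relationship follows directly from the measurement-duration equation, assuming the acquired record exactly spans the displayed screen of $N_{\text{div}}$ horizontal divisions (commonly 10; some scopes capture more than they display). With memory depth $M$, time per division $t_{\text{div}}$, sample rate $f_s$ and capture duration $T$:

$$
T = t_{\text{div}}\,N_{\text{div}}, \qquad T = \frac{M}{f_s}
\quad\Longrightarrow\quad
f_s = \frac{M}{t_{\text{div}}\,N_{\text{div}}}
$$

As a worked example, a 10 kpts memory at 100 ns/div with 10 divisions gives

$$
f_s = \frac{10^{4}}{(100\times10^{-9}\,\text{s})(10)} = \frac{10^{4}}{10^{-6}\,\text{s}} = 10^{10}\ \text{Sa/s} = 10\ \text{GSa/s},
$$

while the same memory at 1 ms/div gives $10^{4} / 10^{-2}\,\text{s} = 10^{6}$ Sa/s, only 1 MSa/s.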

One more thing to remember is that a digital oscilloscope does not always sample at its maximum sample rate. At slower timebase settings, how accurately the analog input signal is displayed depends on the acquisition memory depth rather than on the peak sample rate.

Another issue to bear in mind is that, for the signal to be displayed realistically, the sample rate must exceed twice the signal's highest frequency component. This Nyquist rate (formulated by Harry Nyquist in 1928) is the lower limit on the sampling rate that avoids aliasing. Aliasing arises because, when a continuous function is sampled at a constant rate, other higher-frequency functions also match the resulting set of samples. Sampling above the Nyquist rate avoids this harmful ambiguity.
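Aliasing can be demonstrated numerically. The sketch below assumes an ideal sampler and a pure cosine input; the helper `alias_frequency` and the 9 MHz / 10 MSa/s values are hypothetical illustrations. Because 10 MSa/s is below the 18 MSa/s Nyquist rate for a 9 MHz signal, the samples are indistinguishable from those of a 1 MHz cosine.

```python
import math


def alias_frequency(f_signal_hz, f_sample_hz):
    """Apparent frequency of a sampled sinusoid, folded into the range 0 .. f_sample/2."""
    f = f_signal_hz % f_sample_hz
    return min(f, f_sample_hz - f)


fs = 10e6     # hypothetical 10 MSa/s sampling rate
f_in = 9e6    # 9 MHz input; Nyquist rate would be 2 * 9 MHz = 18 MSa/s
f_alias = alias_frequency(f_in, fs)

# Every sample of the 9 MHz cosine matches the corresponding sample of the alias.
for n in range(20):
    t = n / fs
    a = math.cos(2 * math.pi * f_in * t)
    b = math.cos(2 * math.pi * f_alias * t)
    assert abs(a - b) < 1e-9

print(f"{f_in / 1e6:.0f} MHz sampled at {fs / 1e6:.0f} MSa/s looks like {f_alias / 1e6:.0f} MHz")
```

A signal already below half the sample rate (for example 3 MHz at 10 MSa/s) is returned unchanged by `alias_frequency`, which is the behavior the Nyquist criterion guarantees.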

The bottom line: Bigger is not always better when it comes to memory depth.

Source: RSI
